AI is now on your computer.

Nvidia has announced a new tool for owners of its GeForce RTX 30 and 40 Series GPUs that lets them run an AI-powered chatbot locally on their own systems. Instead of sending prompts to a cloud service, users can now run a language model directly on their PC, which makes AI a more direct, hands-on tool for everyday users. The ‘Chat with RTX’ demo app is already available at the time of writing.

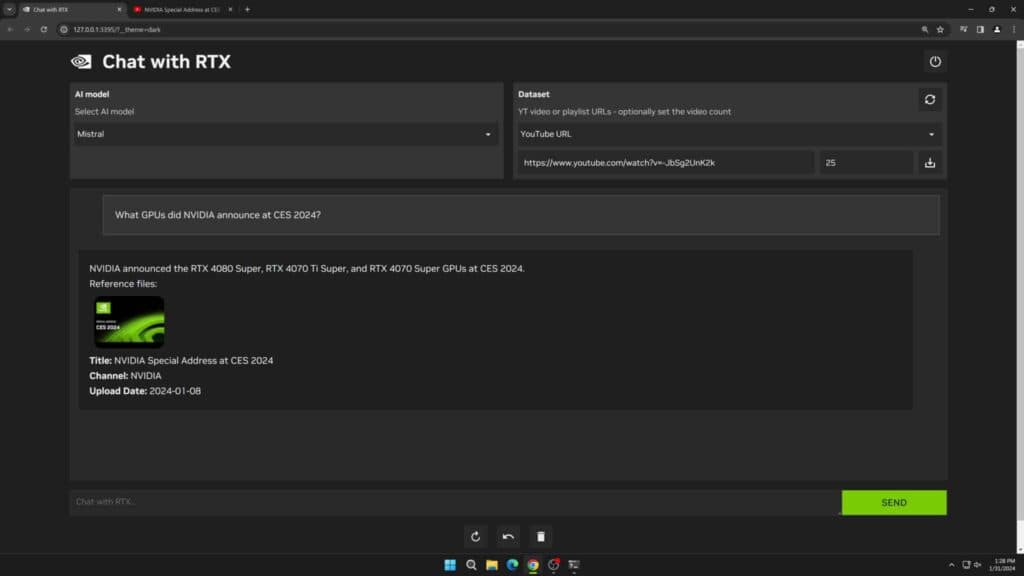

‘Chat with RTX’ is a demo app that lets users personalize a generative AI chatbot with their own content. It is built on retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM software, and NVIDIA RTX acceleration. RAG is a technique that improves the accuracy and reliability of generative AI models by supplying them with relevant facts retrieved from external sources, in this case the user's own files.
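To make the RAG idea a little more concrete, here is a minimal Python sketch of the general pattern. It is not how Chat with RTX works internally; it assumes a toy word-overlap score in place of the embedding models real RAG systems typically use, and `retrieve` and `build_prompt` are hypothetical helpers. The point is simply that relevant passages are found first and then placed in front of the question before it would be handed to a language model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    # Toy relevance score: count of overlapping word occurrences.
    # A real RAG pipeline would usually compare embedding vectors instead.
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum((q & d).values())


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by relevance and keep the top k.
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Hypothetical helper: put the retrieved passages ahead of the question
    # so the model can ground its answer in the user's own content.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    notes = [
        "Pack a charger for the trip.",
        "The team meeting is on Friday at 10am.",
        "Chat with RTX can read local .txt and .pdf files.",
    ]
    print(build_prompt("When is the team meeting?", notes, k=1))
```

Because the retrieved note is pasted into the prompt, the model answers from the user's data rather than only from what it learned during training, which is the accuracy benefit RAG is meant to provide.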

The tool supports several file formats, including .txt, .pdf, .doc/.docx, and .xml. Point the application at a folder containing these files, and it loads them into its library within seconds.
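As a rough illustration of the "point it at a folder" step, the Python sketch below collects the files in a folder whose extensions match the formats listed above. This is a hypothetical helper, not Nvidia's loader: it assumes subfolders are included and only finds the files, whereas a real pipeline would also extract text from PDFs and Word documents before indexing them.

```python
from pathlib import Path

# File extensions the article lists as supported.
SUPPORTED = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    # Hypothetical helper: walk the folder recursively (an assumption about
    # how subfolders are handled) and keep files with a supported extension,
    # ignoring case, sorted so the order is stable between runs.
    root = Path(folder)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED
    )


if __name__ == "__main__":
    for path in find_supported_files("."):
        print(path)
```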

However, potential users should be aware of the tool's limitations. Chat with RTX does not remember context from previous questions, so follow-up questions that rely on an earlier answer may get less relevant responses. The quality of its responses can also vary with several factors, including how a question is phrased, the performance of the selected model, and the size of the dataset it is pointed at. Despite these limitations, Chat with RTX is a significant step towards running and customizing AI models locally, offering more privacy and efficiency than cloud-hosted models.

Letting users connect their own local files as sources for an AI language model is an important step forward for everyday AI use. For many users, though, the open question is what the chatbot is actually useful for.

Where can you use the AI-powered chatbot?

Users can also point the chatbot at YouTube videos, and it will pull in the videos' transcripts as source material. Some reports describe this feature as less useful than hoped, and in some cases outright wrong, though this may improve in future updates.

The official announcement describes using YouTube video links as source material for the AI to generate related content. For example, users can add links from their favorite channels and ask for recommendations, such as travel ideas, based on those videos.

Perhaps the biggest benefits of running generative AI locally are privacy and speed. Because Chat with RTX works with local files and does not need to go online, it can respond faster than a service that has to send every request over the internet. It also means users do not need to share their information online or with a third-party service.

What are the requirements for Chat with RTX?

Chat with RTX only supports a limited set of GPUs and systems. At launch, it requires:

- GPU: GeForce RTX 30 Series or higher

- OS: Windows 10 or Windows 11

Nvidia has also announced an NVIDIA developer contest running through February 23. Developers can enter a generative AI-powered Windows app or plug-in as part of the contest. Prizes include a GeForce RTX 4090 GPU, a pass to attend NVIDIA GTC, and more.

Stay tuned to esports.gg for the latest news and updates.